Tableau Data Governance

Effective governance is essential in Tableau: it promotes the widespread use and acceptance of analytics while ensuring data security and integrity. Establish clear guidelines, processes, and policies to securely manage data and content throughout the Modern Analytics Workflow. Equally important, make sure that everyone in the workflow understands and follows these practices, so that people can trust the analytics used for data-informed decision-making.

To define your organization’s Tableau Governance Models, work systematically through the areas of data and content governance outlined in the diagram below. The Tableau Blueprint Planner can help you develop and implement your governance strategy.

Data Governance in Tableau

Data governance in the Modern Analytics Workflow ensures that the right data is available to the right people in the organization when they need it. Its primary purpose is to establish accountability and to enable, rather than restrict, access to secure and trusted content for users of all skill levels. Robust data governance lets organizations balance broad access against the integrity and security of their data, which builds a culture of trust and lets users make decisions based on accurate, dependable information.

Data Source Management

Data source management covers the processes for selecting and distributing data within your organization. Tableau integrates with your enterprise data platforms and relies on the governance measures you already have in place for those systems. In a self-service environment, content authors and data stewards can connect to a variety of data sources and build and publish data sources, workbooks, and other content. Without these processes, duplicate data sources proliferate, which confuses users, increases the likelihood of errors, and wastes system resources.

Tableau’s hybrid data architecture offers two modes for interacting with data: live query and in-memory extract. You switch between them by selecting the option that suits your use case. In both modes, users can connect to existing data warehouse tables, views, and stored procedures with no additional effort.

Live queries are suitable when you have a high-performance database, need real-time data, or use Initial SQL. In-memory extracts are recommended when your database or network is too slow for interactive queries, when you want to offload query traffic from transactional databases, or when users need offline access to data.
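The criteria above can be expressed as a small decision helper, which is handy when data stewards document the choice for each new data source. This is a minimal sketch: the `ConnectionNeeds` fields and the `recommend_mode` function are hypothetical names, not part of any Tableau API, and the "review" outcome for conflicting requirements is an assumption rather than Tableau guidance.

```python
from dataclasses import dataclass


@dataclass
class ConnectionNeeds:
    """Hypothetical inputs that mirror the live/extract criteria in the text."""
    fast_database: bool          # database and network handle interactive queries well
    needs_real_time: bool        # users need up-to-the-minute data
    uses_initial_sql: bool       # the connection depends on Initial SQL
    offload_transactional: bool  # live queries would burden a transactional database
    needs_offline: bool          # users need access to data while offline


def recommend_mode(needs: ConnectionNeeds) -> str:
    """Return 'live', 'extract', or 'review' when the criteria pull both ways."""
    wants_live = needs.needs_real_time or needs.uses_initial_sql
    wants_extract = (not needs.fast_database
                     or needs.offload_transactional
                     or needs.needs_offline)
    if wants_extract and wants_live:
        return "review"  # conflicting requirements: decide case by case
    if wants_extract:
        return "extract"
    return "live"
```

For example, a fast warehouse with a real-time requirement yields `"live"`, while a slow database that also needs real-time data is flagged for `"review"` instead of being silently forced into one mode.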

With the new multi-table logical layer and relationships introduced in Tableau 2020.2, users are no longer limited to data from a single, flat, denormalized table in a Tableau Data Source. They can build multi-table data sources with flexible relationships that are aware of the level of detail (LOD) of each table, without having to specify join types in advance based on the questions they expect to ask. This lets Tableau directly represent common enterprise data models such as star and snowflake schemas, as well as more complex multi-fact models. Because a single data source supports multiple levels of detail, fewer data sources are needed to represent the same information. Relationships are more flexible than database joins and adapt to new use cases, so you need to build fewer new data models to answer new questions. Using relationships with well-designed schemas reduces both the time needed to create a data model and the number of data sources needed to answer business questions. For more information, see the Metadata Management section and The Tableau Data Model.

When publishing a workbook to Tableau Server or Tableau Cloud, authors can either publish the data source separately or embed it in the workbook. The data source management processes you define determine which option to use. Tableau Data Server, a built-in component of the Tableau platform, lets you use Published Data Sources to share and reuse data models, secure your users’ access to data, and manage and consolidate extracts. Published Data Sources also give Creator- and Explorer-licensed users secure, trusted access to data for web authoring and Ask Data. For more details, see Best Practices for Published Data Sources, Edit Views on the Web, and Optimize Data for Ask Data.

Tableau Catalog indexes all content, including workbooks, data sources, and flows, so authors can search for specific fields, columns, databases, and tables in workbooks and published data sources. For more information, see the Data Management section.

When Tableau Catalog is enabled, content authors can run a Data search and choose Data Sources, Databases and Files, or Tables to check whether the data they need already exists on Tableau Server or Tableau Cloud. This helps reduce duplicate data sources.
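The same "does this data already exist?" check can be scripted against Tableau's Metadata API, a GraphQL interface to the metadata that Catalog indexes. The sketch below only builds the request and parses a response; nothing is sent. The endpoint path, the `databaseTables` query with a `name` filter, and the `downstreamDatasources` field reflect my understanding of the Metadata API schema and are assumptions to verify against the Metadata API reference for your version. The helper names are hypothetical.

```python
import json

# Assumed Metadata API endpoint path; verify for your Tableau Server or Tableau Cloud version.
METADATA_PATH = "/api/metadata/graphql"

# Assumed schema: databaseTables filtered by name, with lineage to data sources.
FIND_TABLE_QUERY = """
query FindTable($name: String) {
  databaseTables(filter: {name: $name}) {
    name
    schema
    database { name }
    downstreamDatasources { name }
  }
}
"""


def build_table_search(server_url: str, table_name: str, auth_token: str):
    """Build (url, headers, body) for a Metadata API request without sending it."""
    url = server_url.rstrip("/") + METADATA_PATH
    headers = {
        "Content-Type": "application/json",
        "X-Tableau-Auth": auth_token,  # session token from a REST API sign-in
    }
    body = json.dumps({"query": FIND_TABLE_QUERY, "variables": {"name": table_name}})
    return url, headers, body


def existing_datasources(response: dict) -> list[str]:
    """Collect unique names of data sources that already use the matching tables."""
    names = set()
    for table in response.get("data", {}).get("databaseTables", []):
        for ds in table.get("downstreamDatasources", []):
            names.add(ds["name"])
    return sorted(names)
```

If `existing_datasources` returns a non-empty list, an author can reuse one of those data sources instead of publishing a duplicate.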

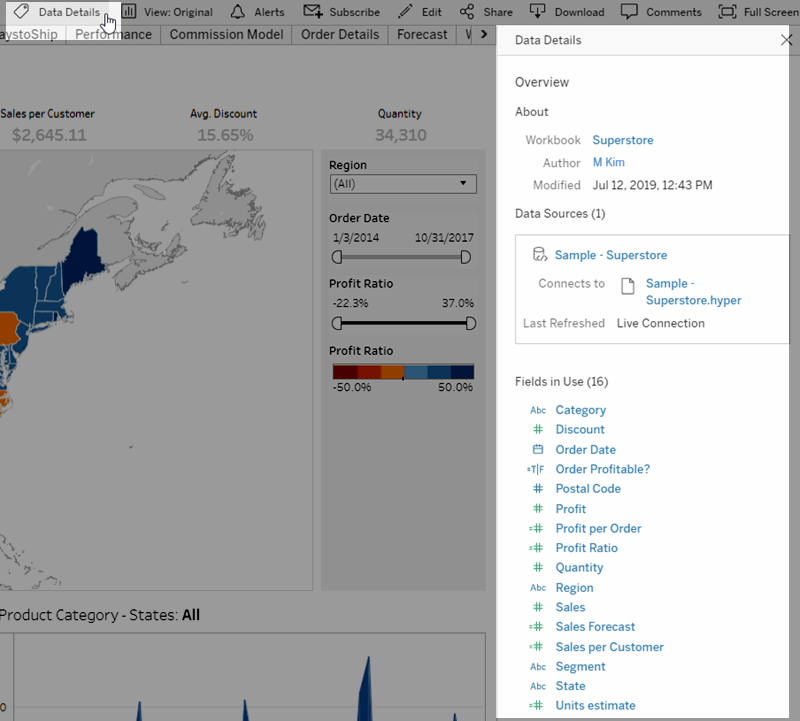

In addition, when a view is published to Tableau Server or Tableau Cloud, consumers can open the Data Details tab to see information about the data used in the view: workbook details such as its name, author, and date modified; the data sources used in the view; and a list of the fields in use.

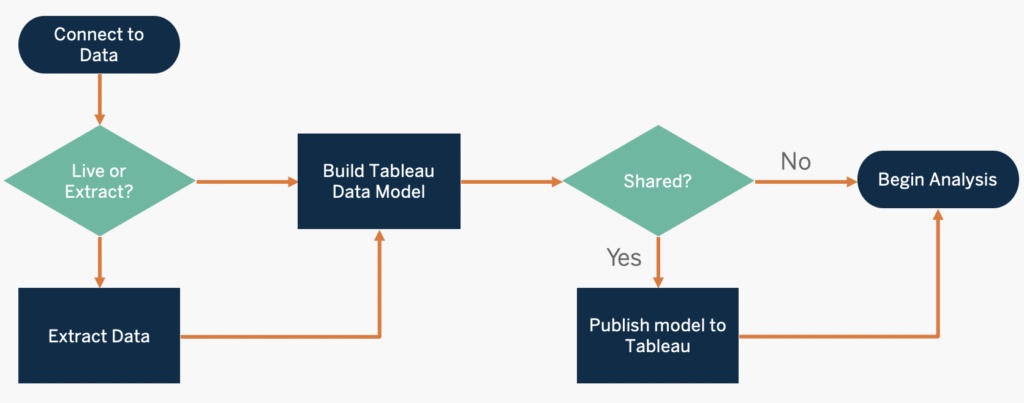

For data stewards who create new Published Data Sources, the following workflow shows the two key decision points in data source management: choosing live or extract, and choosing an embedded or shared data model. This workflow does not imply that a formal modeling process must always be completed before analysis begins.

To discover and prioritize key sources of data, use the Tableau Data and Analytics Survey and Tableau Use Cases and Data Sources tabs in the Tableau Blueprint Planner.

Data Quality

Data quality is the extent to which data is fit for its intended purpose in a given context, particularly for making business decisions. Factors that contribute to data quality include accuracy, completeness, reliability, relevance, and freshness. Your organization likely already has processes to ensure data quality as data is ingested from source systems. The more data quality issues are addressed upstream, the less effort is needed to correct them during analysis. Data quality must be maintained consistently throughout the data lifecycle, from creation to consumption.

The planning phase is a good time to review the data quality checks that already happen upstream, especially because data will be available to a broader user base in a self-service environment. Tableau Prep Builder and Tableau Desktop can help you identify and resolve data quality issues. Establish a process for reporting data quality concerns to IT or data stewards, so that data quality becomes an integral part of building trust and confidence in the data.

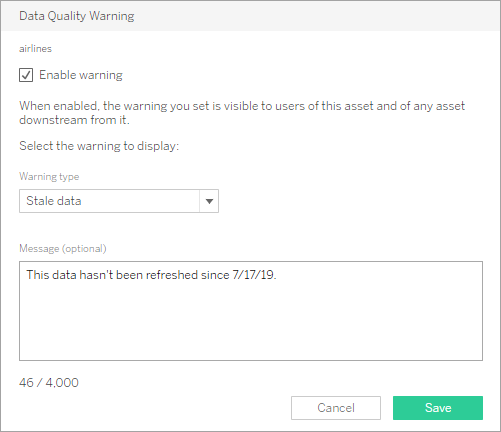

Tableau Data Management and Tableau Catalog let you communicate data quality issues to users, improving visibility into and trust in the data. When a data quality problem arises, you can set a warning message on a data asset to alert users to specific issues with that asset. For example, you might tell users that the data has not been refreshed for two weeks or that a data source is no longer recommended. Each data asset, such as a data source, database, flow, or table, can have one data quality warning assigned to it. To learn more, see the Set a Data Quality Warning section, which covers the warning types Warning, Deprecated, Stale Data, and Under Maintenance.
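A simple upstream check can decide when a Stale Data warning is warranted. The sketch below hard-codes the two-week example from the paragraph above; the function name and message format are hypothetical, and where the last-refresh timestamp comes from (for example, an administrative view or your own job logs) is left as an assumption.

```python
from datetime import datetime, timedelta
from typing import Optional

# Threshold taken from the example in the text: no refresh for two weeks.
STALE_AFTER = timedelta(days=14)


def stale_warning(last_refresh: datetime, now: datetime,
                  threshold: timedelta = STALE_AFTER) -> Optional[str]:
    """Return a Stale Data warning message if the asset is past the threshold."""
    age = now - last_refresh
    if age > threshold:
        return f"Stale Data: last refreshed {age.days} days ago."
    return None  # fresh enough: no warning needed
```

Because the comparison is strictly greater than, an asset refreshed exactly 14 days ago does not yet get a warning; adjust the threshold to match your own freshness policy.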

You can also set a data quality warning with the Tableau REST API. For more information, see Add Data Quality Warning in the Tableau REST API Help.
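As a rough illustration of that REST call, the sketch below builds the request URL and XML body for adding a data quality warning; it does not send anything. The URL path shape, the `dataQualityWarning` element, and its attribute names follow my reading of the REST API reference and should be treated as assumptions. In particular, the exact allowed values for `type` (shown here as the placeholder `WARNING`) must be checked in Add Data Quality Warning. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET


def build_dqw_request(server_url: str, api_version: str, site_id: str,
                      content_type: str, content_id: str, warning_type: str,
                      message: str, severe: bool = False):
    """Return (url, xml_body) for an Add Data Quality Warning call; nothing is sent."""
    # Assumed path shape; content_type is e.g. "datasource", "table", "database", or "flow".
    url = (f"{server_url.rstrip('/')}/api/{api_version}/sites/{site_id}"
           f"/dataQualityWarnings/{content_type}/{content_id}")
    root = ET.Element("tsRequest")
    ET.SubElement(root, "dataQualityWarning", {
        "type": warning_type,            # check allowed values in the REST API reference
        "isActive": "true",
        "message": message,
        "isSevere": str(severe).lower(),
    })
    return url, ET.tostring(root, encoding="unicode")
```

The body would be POSTed to the returned URL with the `X-Tableau-Auth` header set to a session token obtained by signing in through the REST API.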

Enrichment & Preparation

Enrichment and preparation are the processes used to enhance, refine, and ready raw data for analysis. Often a single data source cannot answer every question a user has, and combining data from different sources adds valuable context. Your organization likely already uses ETL processes to cleanse, integrate, aggregate, and store data as it ingests raw data from various sources. Tableau integrates with these existing processes through command line interfaces and APIs.
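As one example of command line integration, an existing ETL scheduler can run a Tableau Prep flow through the Prep Builder command line interface. This minimal sketch only builds the argument list; it assumes the `tableau-prep-cli` executable name and its `-c` (connections/credentials JSON file) and `-t` (flow file) options, both of which vary by platform and install path and should be confirmed in the Tableau Prep documentation. The helper name is hypothetical.

```python
def prep_cli_command(flow_file: str, connections_file: str = "",
                     cli: str = "tableau-prep-cli") -> list[str]:
    """Build the argument list for running a Prep flow from a scheduler or ETL job."""
    if not flow_file.endswith((".tfl", ".tflx")):
        raise ValueError("expected a Tableau Prep flow file (.tfl or .tflx)")
    cmd = [cli]
    if connections_file:
        # JSON file holding credentials for the flow's input and output connections.
        cmd += ["-c", connections_file]
    cmd += ["-t", flow_file]
    return cmd
```

A scheduler could then run the flow with `subprocess.run(prep_cli_command("sales.tfl", "creds.json"), check=True)`, so a failed run raises an error that the ETL tool can report.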

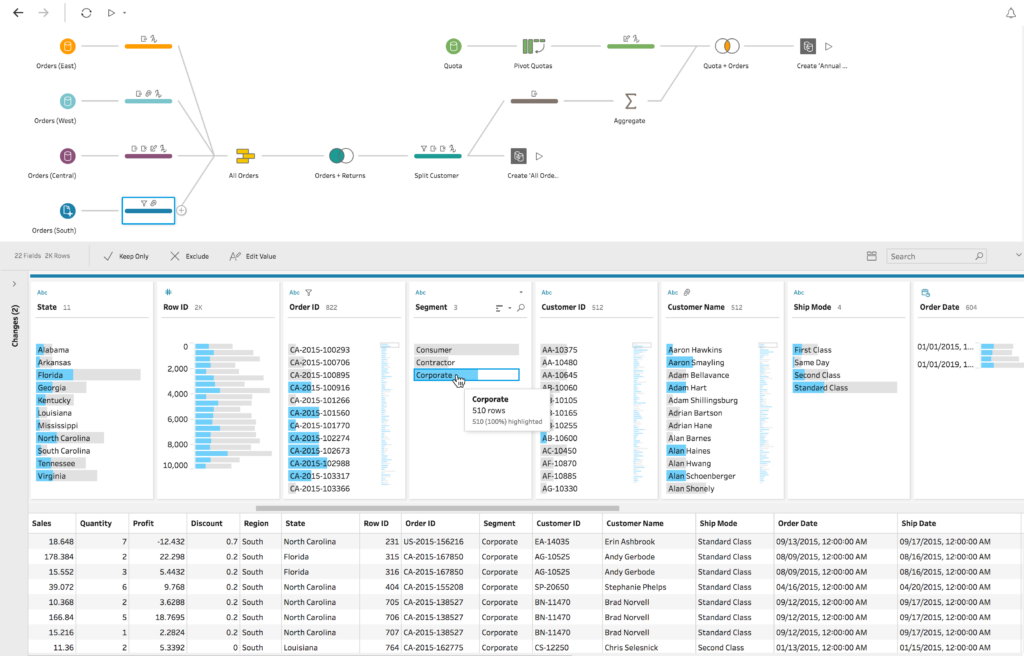

For self-service data preparation, use Tableau Prep Builder and Tableau Prep Conductor. Together they let you combine multiple data sources and automate that work on a schedule. Tableau Prep supports several output types for Tableau Server or Tableau Cloud, including CSV, Hyper, TDE, and Published Data Sources. Starting with version 2020.3, Tableau Prep can also save the results of a flow directly to a table in a relational database, so data prepared in Tableau Prep Builder can be stored and managed centrally and used across your organization. Tableau Prep Builder is included with the Tableau Creator license, and Tableau Prep Conductor is part of Tableau Data Management. Tableau Data Management helps you manage the data in your analytics environment, covering data preparation, cataloging, search, and governance, so that reliable, up-to-date data consistently drives decision-making.

Tableau Prep Builder gives users visual, intelligent, immediate feedback at each step of the preparation process, so they can prototype and prepare disparate data sources for analysis. Once the steps are defined and validated, publish the flow to Tableau Server or Tableau Cloud, where Prep Conductor runs it on a predefined schedule and generates a Published Data Source. Automation keeps the process consistent, reduces manual errors, tracks the success or failure of each run, and saves time. Users can trust the output because the entire flow can be reviewed on Tableau Server or Tableau Cloud.
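Tracking the success or failure of scheduled runs can also be scripted. When a flow run is started or checked through the REST API, the job can be inspected with the Query Job method. The sketch below parses such a response; the `http://tableau.com/api` namespace matches REST API responses, but the `finishCode` meanings (0 success, 1 failure, 2 cancelled) and the assumption that a running job has no `finishCode` are to be confirmed in the REST API reference. The helper name is hypothetical.

```python
import xml.etree.ElementTree as ET

NS = {"t": "http://tableau.com/api"}  # namespace used in Tableau REST API responses

# Assumed meaning of the job's finishCode attribute; confirm in the REST API reference.
FINISH_CODES = {"0": "succeeded", "1": "failed", "2": "cancelled"}


def job_outcome(response_xml: str) -> str:
    """Interpret a Query Job response; a job without finishCode is treated as running."""
    job = ET.fromstring(response_xml).find("t:job", NS)
    if job is None:
        raise ValueError("response contains no <job> element")
    code = job.get("finishCode")
    if code is None:
        return "in progress"
    return FINISH_CODES.get(code, f"unknown ({code})")
```

A monitoring script could poll this until the result is no longer `"in progress"` and alert the data steward on `"failed"`.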

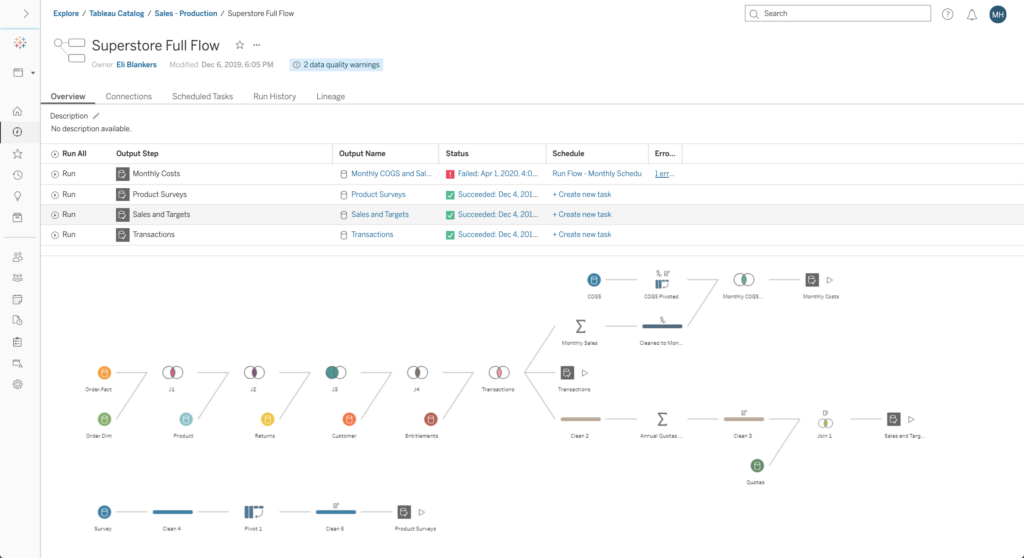

Tableau Prep Flow

Tableau Prep Flow in Tableau Server or Tableau Cloud

Data Security

Data security is paramount for every enterprise, and Tableau provides robust features that extend your existing security implementations. IT administrators can implement security in the database, using database authentication; in Tableau, using permissions; or with a hybrid approach that combines both, depending on the organization’s needs. Security is enforced regardless of how users access the data: through published views on the web or on mobile devices, or through Tableau Desktop or Tableau Prep Builder. Many customers prefer the hybrid approach because it accommodates a wide range of use cases. To begin, establish a data security classification framework that defines the types of data in your organization and their levels of sensitivity.

When you rely on database security, consider the authentication method used to access the database. Signing in to Tableau Server or Tableau Cloud is separate from authenticating to the database, so Tableau Server and Tableau Cloud users must have their own credentials (a username and password, or a service account username and password) to connect to the database and have database-level security applied. To further protect data integrity, Tableau requires only read-access credentials for the database, which prevents publishers from accidentally changing the underlying data. In some cases, granting the database user permission to create temporary tables brings performance and security benefits, because temporary data is stored in the database instead of in Tableau. For Tableau Cloud, you must embed credentials in the data source’s connection information to enable automatic refreshes. For Google and Salesforce.com data sources, OAuth 2.0 access tokens can be used as embedded credentials.
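When publishing through the REST API, embedded credentials are supplied in the XML part of the Publish Data Source request. The sketch below builds only that XML part (the full request is multipart and also carries the data source file). The `connectionCredentials` element with `name`, `password`, and `embed` attributes reflects my reading of the REST API reference and is an assumption to verify; the function name is hypothetical. In practice, read the password from a secret store rather than hard-coding it.

```python
import xml.etree.ElementTree as ET


def publish_request_xml(ds_name: str, project_id: str,
                        db_user: str, db_password: str, embed: bool = True) -> str:
    """Build the tsRequest part of a Publish Data Source call with embedded credentials."""
    root = ET.Element("tsRequest")
    ds = ET.SubElement(root, "datasource", {"name": ds_name})
    # A read-only database account is sufficient for Tableau to query the data.
    ET.SubElement(ds, "connectionCredentials", {
        "name": db_user,
        "password": db_password,
        "embed": str(embed).lower(),  # embedded credentials allow unattended refreshes
    })
    ET.SubElement(ds, "project", {"id": project_id})
    return ET.tostring(root, encoding="unicode")
```

Pairing embedded credentials with a dedicated read-only service account keeps scheduled refreshes working while limiting what a leaked credential could change.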

Extract encryption at rest is a data security feature that lets you encrypt .hyper extracts while they are stored on Tableau Server. Tableau Server administrators can enforce encryption of all extracts on their site, or let users choose to encrypt the extracts associated with particular published workbooks or data sources. To learn more, see the Extract Encryption at Rest documentation.

If your organization uses Data Extract Encryption at Rest, you can also configure Tableau Server to use AWS or Azure as the Key Management Service (KMS) for extract encryption. This requires deploying Tableau Server on AWS or Azure and a license for Advanced Management for Tableau Server. On AWS, Tableau Server uses the AWS KMS customer master key (CMK) to generate an AWS data key, which serves as the root master key for all encrypted extracts. On Azure, Tableau Server uses Azure Key Vault to encrypt the root master key (RMK) for encrypted extracts. Even when integrated with AWS KMS or Azure KMS, Tableau Server still uses its native Java keystore and local KMS to store secrets securely; AWS KMS or Azure KMS is used only to encrypt the root master key of encrypted extracts. For more information, see the Key Management System documentation.

For Tableau Cloud, data is encrypted at rest by default. With Advanced Management for Tableau Cloud, Customer-Managed Encryption Keys give you additional control over key rotation and auditing, adding a layer of security by letting you encrypt your site’s data extracts with a customer-managed, site-specific key. For sites that enable encryption, the Salesforce Key Management System (KMS) instance stores the default site-specific encryption key. Encryption follows a key hierarchy: when Tableau Cloud encrypts an extract, the Tableau Cloud KMS first checks its key caches for a suitable data key. If none is found, the KMS GenerateDataKey API generates one according to the key policy associated with the key and returns both a plaintext and an encrypted copy of the data key to Tableau Cloud. Tableau Cloud encrypts the data with the plaintext copy and stores the encrypted copy of the key alongside the encrypted data.
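The key hierarchy described above is a form of envelope encryption: a master key that never leaves the KMS wraps per-site data keys, and only the wrapped data key is stored next to the encrypted extract. The sketch below illustrates that flow, including the key cache. It is a teaching model, not Tableau's implementation: `ToyKMS` and the helper functions are hypothetical, and the XOR-with-SHAKE-256 keystream is a toy cipher with no authentication, so it must not be used to protect real data.

```python
import hashlib
import secrets


def _keystream_xor(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Toy cipher: XOR with a SHAKE-256 keystream. Illustration only, NOT secure."""
    stream = hashlib.shake_256(key + nonce).digest(len(data))
    return bytes(a ^ b for a, b in zip(data, stream))


class ToyKMS:
    """Hypothetical stand-in for a KMS holding the customer-managed master key."""

    def __init__(self) -> None:
        self._master_key = secrets.token_bytes(32)  # never leaves the KMS
        self._cache: dict[str, tuple[bytes, bytes, bytes]] = {}

    def data_key(self, site: str) -> tuple[bytes, bytes, bytes]:
        """Return (plaintext_key, wrapped_key, key_nonce), reusing a cached key if present."""
        if site not in self._cache:
            plaintext = secrets.token_bytes(32)
            nonce = secrets.token_bytes(16)
            wrapped = _keystream_xor(self._master_key, nonce, plaintext)
            self._cache[site] = (plaintext, wrapped, nonce)
        return self._cache[site]

    def unwrap(self, wrapped: bytes, nonce: bytes) -> bytes:
        """Recover a plaintext data key; only the KMS can do this."""
        return _keystream_xor(self._master_key, nonce, wrapped)


def encrypt_extract(kms: ToyKMS, site: str, extract: bytes) -> dict:
    """Encrypt with the plaintext data key and store only the wrapped key with the data."""
    plaintext_key, wrapped_key, key_nonce = kms.data_key(site)
    nonce = secrets.token_bytes(16)
    return {"ciphertext": _keystream_xor(plaintext_key, nonce, extract),
            "nonce": nonce, "wrapped_key": wrapped_key, "key_nonce": key_nonce}


def decrypt_extract(kms: ToyKMS, blob: dict) -> bytes:
    """Ask the KMS to unwrap the data key, then decrypt the extract."""
    key = kms.unwrap(blob["wrapped_key"], blob["key_nonce"])
    return _keystream_xor(key, blob["nonce"], blob["ciphertext"])
```

The design point is that rotating or revoking the master key in the KMS controls access to every extract, without re-encrypting the extracts themselves.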

To control which users can access specific data, apply user filters to data sources in Tableau Server and Tableau Cloud. User filters give you granular control over the data visible in published views, based on each user’s Tableau Server login account. For example, a regional manager sees only data for their own region, not the data of other regional managers. With these data security approaches, you can publish views or dashboards that deliver secure, personalized analysis to a wide range of users on Tableau Cloud or Tableau Server. For more information, see Data Security and Restrict Access at the Data Row Level. If row-level security is critical to your analytics use case, Tableau Data Management offers virtual connections with data policies, which let you implement user filtering at scale; see Virtual Connections and Data Policies.
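In Tableau, a user filter is typically a calculated field such as `[Region Manager] = USERNAME()` applied as a data source filter, often backed by an entitlement table. The sketch below mimics that row-level logic outside Tableau so the behavior is easy to test. The `ENTITLEMENTS` mapping, the `Row` type, and `visible_rows` are hypothetical; the design choice of showing nothing to unknown users (deny by default) is an assumption you may want to mirror in your own filters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Row:
    region: str
    sales: float


# Hypothetical entitlement table: which Tableau user may see which regions.
ENTITLEMENTS = {
    "alice": {"West"},
    "bob": {"East", "Central"},
}


def visible_rows(rows: list[Row], username: str) -> list[Row]:
    """Mimic a user filter: keep only rows in regions the signed-in user is entitled to."""
    allowed = ENTITLEMENTS.get(username, set())  # unknown users see nothing
    return [r for r in rows if r.region in allowed]
```

With rows for West, East, and Central, `alice` sees only West and `bob` sees East and Central, while an unlisted user gets an empty result.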

Metadata Management

Metadata management encompasses the policies and processes that ensure seamless access, sharing, analysis, and maintenance of information throughout the organization, serving as an extension of Data Source Management. Metadata refers to a user-friendly representation of data using common terms, akin to a semantic layer found in traditional business intelligence platforms. Curated data sources simplify the complexity of an organization’s modern data architecture, making fields easily understandable regardless of the data store or table they originate from.

Tableau has a simple, elegant, and powerful metadata system that gives users flexibility while supporting enterprise metadata management. The Tableau Data Model can be embedded in a workbook or managed centrally as a Published Data Source with Data Server. After you connect to data and create the Tableau Data Model, which will become a Published Data Source on Tableau Server or Tableau Cloud, look at it from your users’ perspective: analysis is far easier when they start from a well-structured model that is filtered and sized to answer specific business questions. For details on Published Data Sources, see The Tableau Data Model, Best Practices for Published Data Sources, and Enabling Governed Data Access with Tableau Data Server.

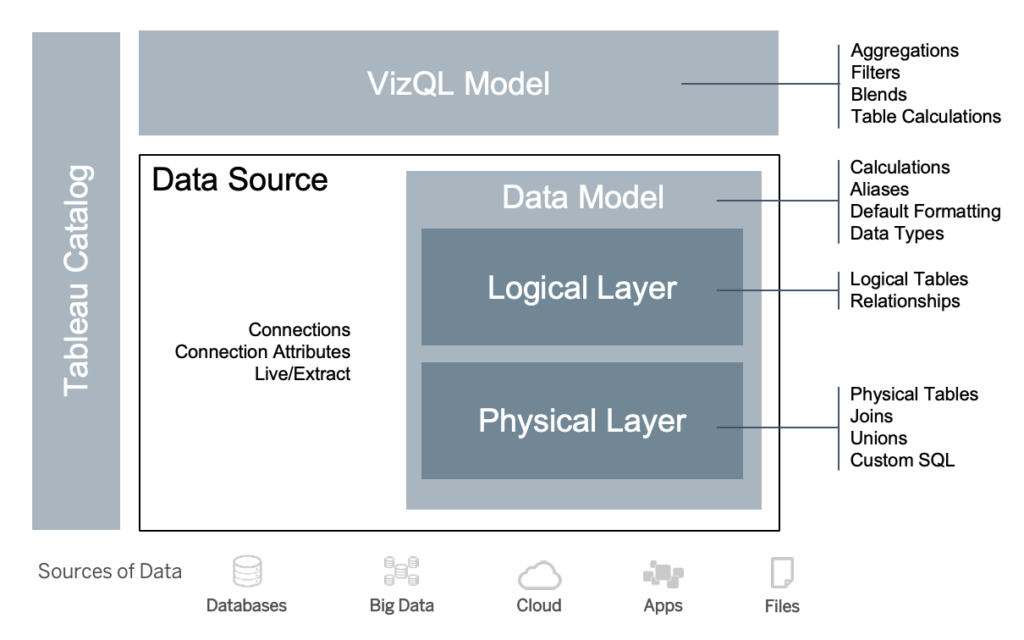

The diagram below illustrates the placement of various elements within the Tableau Data Model:

Starting with version 2020.2, a Data Source in Tableau comprises the connection, connection attributes, and the physical and logical layers of a Data Model. When you connect, Tableau automatically classifies fields as dimensions or measures. The Data Model also stores calculations, aliases, and formatting. The physical layer contains physical tables defined by joins, unions, or custom SQL. Each group of one or more physical tables defines a logical table, which resides in the logical layer together with relationships.

Relationships introduce a more flexible approach to data modeling compared to traditional joins. They describe how two tables relate to each other based on common fields, without physically combining the tables. Utilizing relationships offers several advantages over joins:

- Join types between tables do not require explicit configuration. Selecting the fields to define the relationship is sufficient.

- Relationships employ automatic joins, deferring the selection of join types to the analysis phase and adapting to the specific context.

- Tableau leverages relationships to generate accurate aggregations and appropriate joins during analysis, based on the current field context within a worksheet.

- Multiple tables at different levels of detail can coexist within a single data source, reducing the need for multiple data sources to represent the same data.

- Unmatched measure values are preserved, preventing unintended data loss.

- Tableau optimizes query generation, retrieving only relevant data for the current view.

- At runtime in the VizQL model, queries are dynamically generated based on dimensions, measures, filters, and aggregations. The separate logical tables provide contextual information for determining appropriate joins and aggregations, allowing users to design the Data Source without anticipating all possible analysis variations.

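To see why aggregating at each table's native level of detail matters, compare a row-level inner join with relationship-style aggregation on two small tables. This is a simplified model, not how VizQL generates queries: the `orders` and `lines` tables and both functions are hypothetical. The join repeats the order-level shipping cost once per order line and drops the order that has no lines, while aggregating each table separately counts every order exactly once and keeps the unmatched value.

```python
from collections import defaultdict

# Hypothetical tables at different levels of detail.
orders = [  # one row per order; shipping is an order-level measure
    {"order_id": 1, "region": "West", "shipping": 10.0},
    {"order_id": 2, "region": "East", "shipping": 5.0},
    {"order_id": 3, "region": "East", "shipping": 7.0},  # no line items yet
]
lines = [  # one row per order line
    {"order_id": 1, "amount": 100.0},
    {"order_id": 1, "amount": 50.0},
    {"order_id": 2, "amount": 80.0},
]


def inner_join_totals() -> tuple[float, float]:
    """Aggregate after a row-level inner join: duplicates and drops values."""
    by_order = defaultdict(list)
    for ln in lines:
        by_order[ln["order_id"]].append(ln)
    shipping = amount = 0.0
    for order in orders:
        for ln in by_order.get(order["order_id"], []):
            shipping += order["shipping"]  # repeated once per matching line
            amount += ln["amount"]
    return shipping, amount


def relationship_style_totals() -> tuple[float, float]:
    """Aggregate each table at its own level of detail, then combine the results."""
    shipping = sum(o["shipping"] for o in orders)  # every order counted once
    amount = sum(ln["amount"] for ln in lines)
    return shipping, amount


print(inner_join_totals())          # (25.0, 230.0): order 1 doubled, order 3 lost
print(relationship_style_totals())  # (22.0, 230.0): correct shipping total
```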

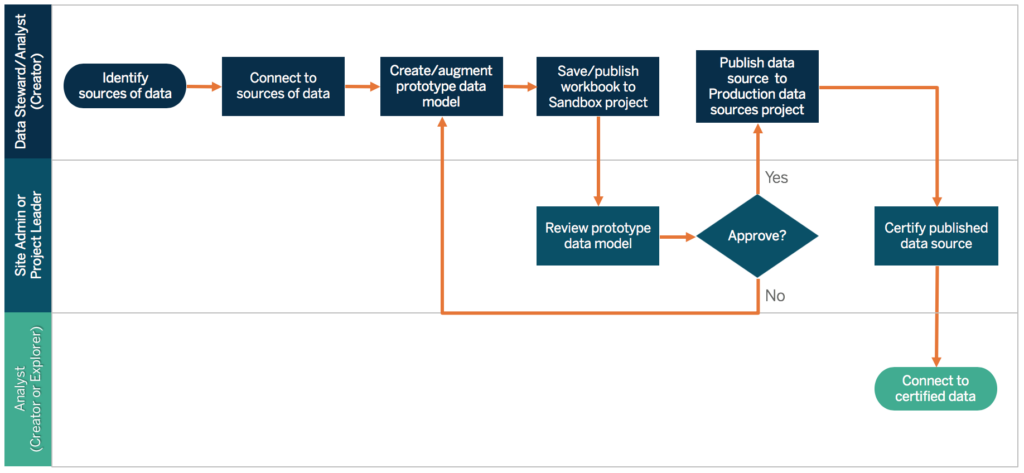

For data stewards or authors with direct access to the data, prototype the data source as an embedded data source in a Tableau workbook. Then create a Published Data Source in Tableau to share the curated Tableau Data Model. The direct access workflow is shown below:

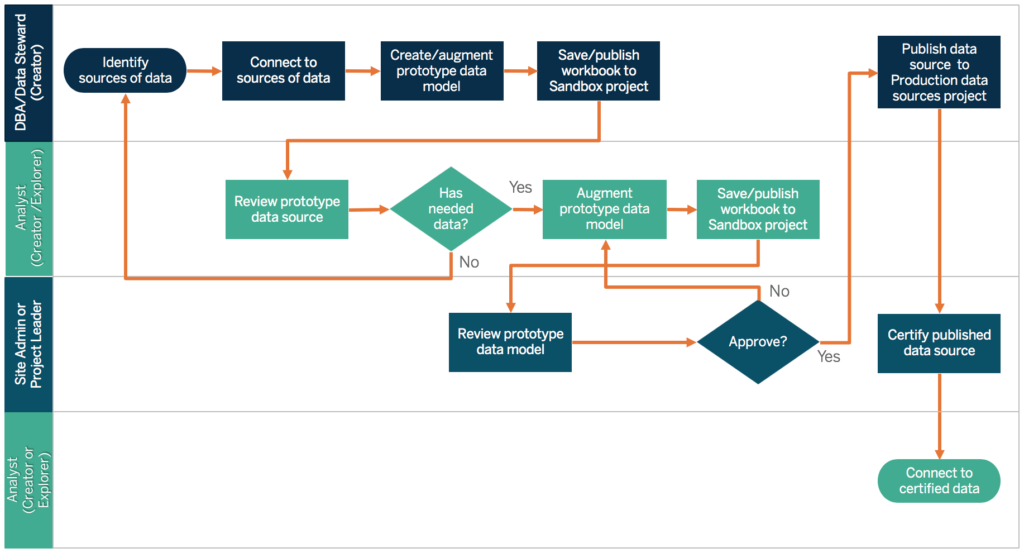

When authors lack direct access to data sources, they rely on a Database Administrator (DBA) or data steward to provide a prototype data source embedded in a Tableau workbook. After the authors confirm that the prototype contains the data they need, a Site Administrator or Project Leader creates a Published Data Source in Tableau to share the Tableau Data Model. The workflow for restricted access is illustrated below:

The metadata checklist serves as a guide to ensure optimal curation of a Published Data Source. By adhering to data standards outlined in the checklist, you empower the business with well-governed, self-service access to data that is both user-friendly and easily comprehensible. Before creating an extract or Published Data Source in Tableau, it is recommended to carefully review and apply the following checklist to the Tableau Data Model:

- Validate the data model.

- Filter and size the data to suit the specific analysis.

- Utilize standard and user-friendly naming conventions.

- Incorporate field name synonyms and custom suggestions for Ask Data.

- Create hierarchies to establish drill paths.

- Define appropriate data types.

- Apply formatting for dates and numbers.

- Specify the start date for the fiscal year, if applicable.

- Introduce new calculations.

- Remove duplicate or test calculations.

- Provide field descriptions as comments.

- Aggregate the data to the highest level required.

- Hide unused fields to declutter the view.
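A few checklist items can be checked mechanically before publishing, for example when field metadata has been exported for review. The sketch below is a hypothetical lint pass over a simplified field list: the `Field` type, the naming heuristic (all-caps names or names containing underscores are treated as raw column names), and the test-field rule are assumptions chosen for illustration. Items such as validating the model or setting the fiscal year start still need human review.

```python
import re
from dataclasses import dataclass


@dataclass
class Field:
    name: str
    description: str = ""
    hidden: bool = False
    used_in_views: bool = True


# Heuristic for raw database names: ALL_CAPS or anything containing an underscore.
RAW_NAME = re.compile(r"^[A-Z0-9_]+$|_")


def checklist_issues(fields: list[Field]) -> list[str]:
    """Flag visible fields that break a few mechanically checkable checklist items."""
    issues = []
    seen = set()
    for f in fields:
        if f.hidden:
            continue  # hidden fields are not shown to users
        if RAW_NAME.search(f.name):
            issues.append(f"{f.name}: use a friendly name")
        if not f.description:
            issues.append(f"{f.name}: add a description")
        if not f.used_in_views:
            issues.append(f"{f.name}: hide unused field")
        if f.name.lower().startswith("test") or f.name.lower() in seen:
            issues.append(f"{f.name}: remove duplicate or test field")
        seen.add(f.name.lower())
    return issues
```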

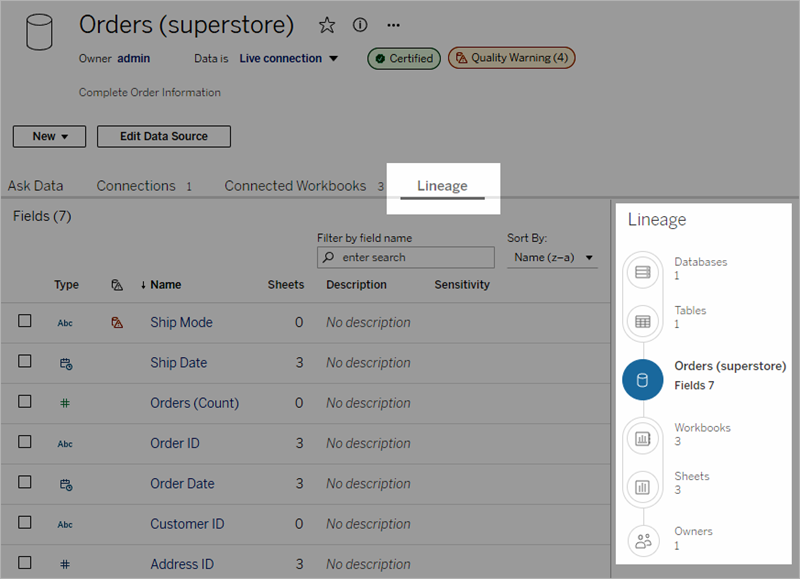

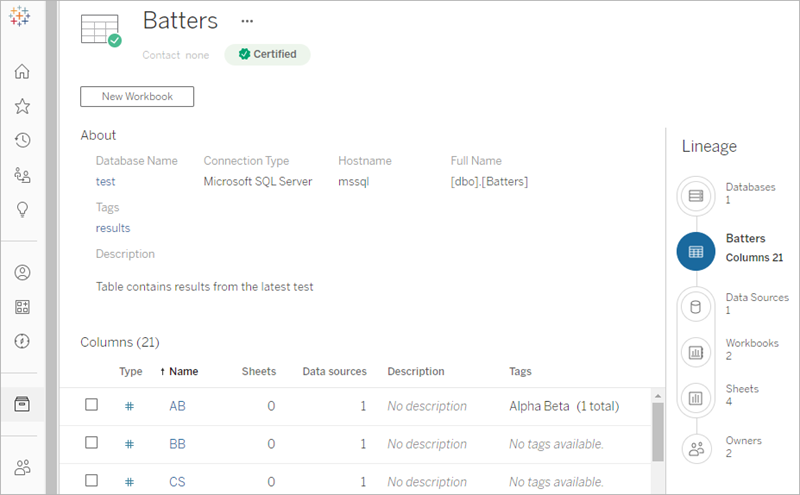

Starting with version 2019.3, Tableau Catalog, part of Data Management, discovers and indexes all content on Tableau, including workbooks, data sources, sheets, and flows. During indexing, Tableau Catalog collects metadata, schemas, and lineage information about the content, and from that metadata it identifies the databases, files, and tables used by content on your Tableau Server or Tableau Cloud site. Knowing where your data comes from is crucial for establishing trust, and knowing who else uses it lets you assess the impact of data changes in your environment. Lineage in Tableau Catalog covers both internal and external content and can be used for impact analysis. For more information, see Use Lineage for Impact Analysis.

With lineage, you can trace the lineage graph all the way to content owners: the owners of workbooks, data sources, and flows, and the contacts for databases and tables in the lineage. When changes are planned, you can email those owners to tell them about the potential impact. For more information, see Use email to contact owners.
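Impact analysis over lineage amounts to a graph traversal: start from the asset that will change, walk everything downstream, and collect the owners to notify. The sketch below does a breadth-first walk over a hypothetical, hard-coded lineage graph; in practice the edges and owners would come from Tableau Catalog (for example, via the Metadata API), and `DOWNSTREAM`, `OWNERS`, and `impact` are illustrative names only.

```python
from collections import deque

# Hypothetical lineage graph: asset -> assets that consume it directly.
DOWNSTREAM = {
    "table:orders": ["datasource:Sales", "flow:Clean Orders"],
    "flow:Clean Orders": ["datasource:Orders (Clean)"],
    "datasource:Sales": ["workbook:Exec Dashboard"],
    "datasource:Orders (Clean)": ["workbook:Ops Review", "workbook:Exec Dashboard"],
}
OWNERS = {
    "datasource:Sales": "ana@example.com",
    "flow:Clean Orders": "raj@example.com",
    "datasource:Orders (Clean)": "raj@example.com",
    "workbook:Exec Dashboard": "kim@example.com",
    "workbook:Ops Review": "lee@example.com",
}


def impact(asset: str) -> tuple[list[str], list[str]]:
    """Breadth-first walk of everything downstream of `asset`, plus owners to notify."""
    seen, queue = set(), deque([asset])
    while queue:
        for child in DOWNSTREAM.get(queue.popleft(), []):
            if child not in seen:  # a dashboard fed by two sources is visited once
                seen.add(child)
                queue.append(child)
    affected = sorted(seen)
    owners = sorted({OWNERS[a] for a in affected if a in OWNERS})
    return affected, owners
```

For `impact("table:orders")`, all five downstream assets are affected and four distinct owners would receive a notification, even though one owner is responsible for two assets.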

Monitoring & Management

Monitoring plays a vital role in the self-service model, enabling IT and administrators to gain insights into data usage and proactively address performance, connectivity, and refresh issues. To ensure smooth operations, IT departments utilize a combination of tools and job schedulers aligned with their database standards to handle data ingestion and monitoring, as well as to maintain server health.

Just as business users rely on data to make decisions, administrators rely on data to make decisions about their Tableau deployment. Tableau Server includes default administrative views that let Server and Site Administrators monitor extract refresh status, data source usage, and subscription and alert delivery, and you can create custom administrative views from Tableau Server’s repository data. In Tableau Cloud, Site Administrators can use the default administrative views to monitor site activity and use Admin Insights to build their own views. For more information, see Tableau Monitoring, Measurement of Tableau User Engagement and Adoption, and Admin Insights.
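As an example of a custom view built on repository data, the sketch below summarizes recent extract refresh outcomes. The SQL targets the Tableau Server repository (PostgreSQL) through its read-only user; the `background_jobs` table, its `job_name`, `title`, `finish_code`, and `completed_at` columns, the `'Refresh Extracts'` job name, and `finish_code = 0` meaning success are all assumptions to confirm in the repository data dictionary for your version. The Python function only aggregates rows you have already fetched, so it runs without a database.

```python
from collections import Counter

# Assumed repository query; verify table and column names in the data dictionary.
REFRESH_JOBS_SQL = """
SELECT title, finish_code, completed_at
FROM background_jobs
WHERE job_name = 'Refresh Extracts'
  AND completed_at > now() - interval '1 day'
"""


def summarize_refreshes(rows):
    """rows: (title, finish_code, completed_at) tuples; finish_code 0 is assumed success.

    Returns (success_rate, [(title, failure_count), ...]) sorted by most failures.
    """
    failures = Counter(title for title, code, _ in rows if code != 0)
    total = len(rows)
    rate = (total - sum(failures.values())) / total if total else 1.0
    return rate, failures.most_common()
```

Listing the data sources with the most failed refreshes first helps administrators decide where to look before users notice stale dashboards.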